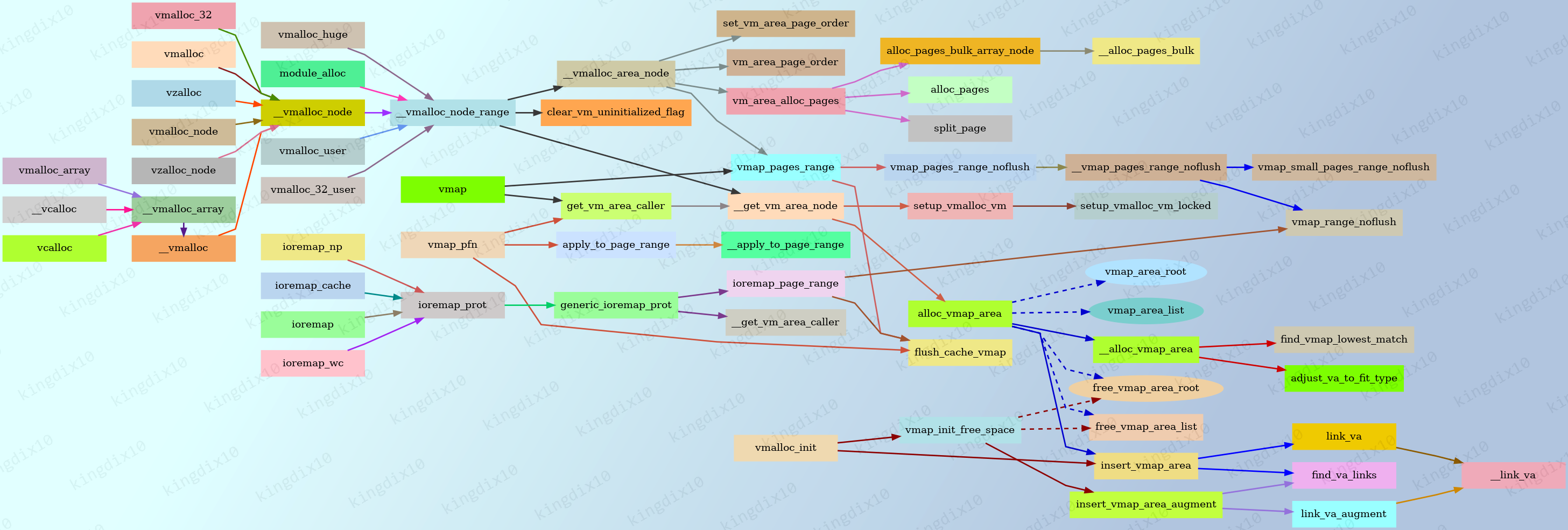

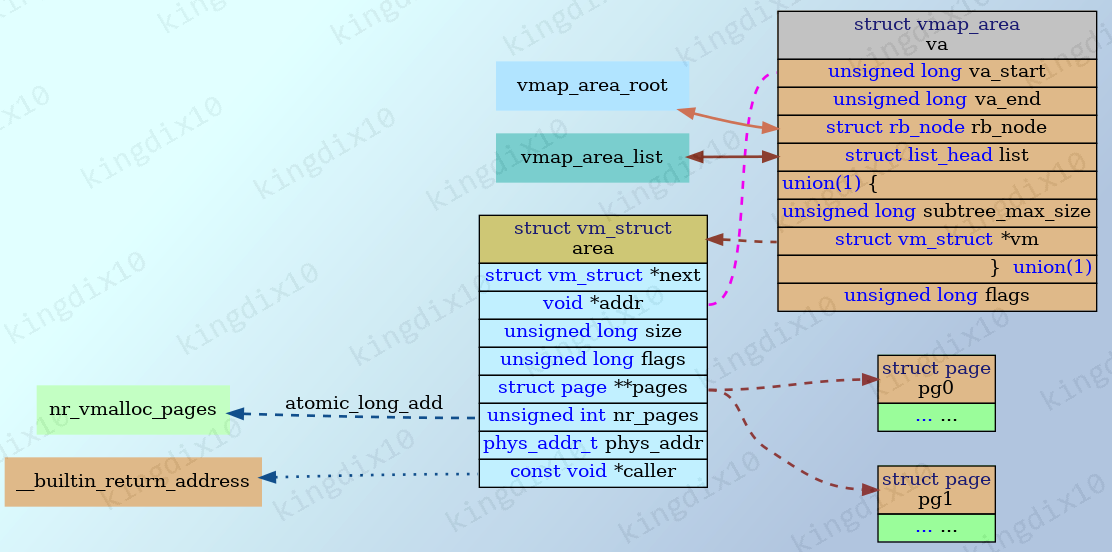

1. Global data structures

    /// mm/vmalloc.c
    /* Export for kexec only */
    LIST_HEAD(vmap_area_list);
    static struct rb_root vmap_area_root = RB_ROOT;
    /// ... ...

    /*
     * This kmem_cache is used for vmap_area objects. Instead of
     * allocating from slab we reuse an object from this cache to
     * make things faster. Especially in "no edge" splitting of
     * free block.
     */
    static struct kmem_cache *vmap_area_cachep;

    /*
     * This linked list is used in pair with free_vmap_area_root.
     * It gives O(1) access to prev/next to perform fast coalescing.
     */
    static LIST_HEAD(free_vmap_area_list);

    /*
     * This augment red-black tree represents the free vmap space.
     * All vmap_area objects in this tree are sorted by va->va_start
     * address. It is used for allocation and merging when a vmap
     * object is released.
     *
     * Each vmap_area node contains a maximum available free block
     * of its sub-tree, right or left. Therefore it is possible to
     * find a lowest match of free area.
     */
    static struct rb_root free_vmap_area_root = RB_ROOT;

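Each memory region managed by vmalloc is described by a struct vmap_area. vmap_area_cachep is the kmem_cache from which struct vmap_area objects are allocated.

free_vmap_area_root and free_vmap_area_list track the free regions, while vmap_area_root and vmap_area_list track the allocated (busy) regions.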
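
The comments above say the free list exists so that a released area can be coalesced with its neighbours. The following is a minimal user-space sketch of that idea, not the kernel code: `struct range` and `free_and_merge` are hypothetical names, and a sorted array with a linear search stands in for the red-black tree and linked list.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for struct vmap_area: a [start, end) range. */
struct range {
    unsigned long start;
    unsigned long end;
};

/*
 * Return [start, end) to the free set `fr` (sorted by start, non-overlapping),
 * merging with the previous and/or next range when they are adjacent.
 * `cap` is the array capacity. Returns the new number of free ranges.
 */
size_t free_and_merge(struct range *fr, size_t nfree, size_t cap,
                      unsigned long start, unsigned long end)
{
    size_t i = 0;
    size_t j;

    assert(start < end);

    /* Find the first free range that starts at or after the released one. */
    while (i < nfree && fr[i].start < start)
        i++;

    bool merge_prev = i > 0 && fr[i - 1].end == start;
    bool merge_next = i < nfree && fr[i].start == end;

    if (merge_prev && merge_next) {
        /* The released range bridges two free ranges: fold all three into one. */
        fr[i - 1].end = fr[i].end;
        for (j = i + 1; j < nfree; j++)
            fr[j - 1] = fr[j];
        return nfree - 1;
    }
    if (merge_prev) {
        fr[i - 1].end = end;
        return nfree;
    }
    if (merge_next) {
        fr[i].start = start;
        return nfree;
    }

    /* No adjacent neighbour: insert a new entry at position i. */
    assert(nfree < cap);
    for (j = nfree; j > i; j--)
        fr[j] = fr[j - 1];
    fr[i].start = start;
    fr[i].end = end;
    return nfree + 1;
}
```

In this sketch the neighbours are found by index arithmetic on the array. In the kernel the same prev/next lookup is what free_vmap_area_list provides in O(1) once the position in the tree is known.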

2. Initialization flow

    /// mm/vmalloc.c
    void __init vmalloc_init(void)
    {
        struct vmap_area *va;
        struct vm_struct *tmp;
        int i;

        /*
         * Create the cache for vmap_area objects.
         */
        vmap_area_cachep = KMEM_CACHE(vmap_area, SLAB_PANIC);

        for_each_possible_cpu(i) {
            struct vmap_block_queue *vbq;
            struct vfree_deferred *p;

            vbq = &per_cpu(vmap_block_queue, i);
            spin_lock_init(&vbq->lock);
            INIT_LIST_HEAD(&vbq->free);
            p = &per_cpu(vfree_deferred, i);
            init_llist_head(&p->list);
            INIT_WORK(&p->wq, delayed_vfree_work);
            xa_init(&vbq->vmap_blocks);
        }

        /// The kernel image lives inside the vmalloc region. Adding it to
        /// vmap_area_list keeps vmalloc from handing out that range.
        /// percpu may also need to reserve part of the vmalloc region.
        /* Import existing vmlist entries. */
        for (tmp = vmlist; tmp; tmp = tmp->next) {
            va = kmem_cache_zalloc(vmap_area_cachep, GFP_NOWAIT);
            if (WARN_ON_ONCE(!va))
                continue;

            va->va_start = (unsigned long)tmp->addr;
            va->va_end = va->va_start + tmp->size;
            va->vm = tmp;
            insert_vmap_area(va, &vmap_area_root, &vmap_area_list);
        }

        /*
         * Now we can initialize a free vmap space.
         */
        /// Add the free regions to free_vmap_area_root and free_vmap_area_list.
        vmap_init_free_space();
        vmap_initialized = true;
    }

2.1. Handling the kernel image region

    +-- setup_arch
    |   +-- paging_init
    |   |   +-- map_kernel
    |   |   |   +-- vm_area_add_early

2.2. vmap_init_free_space

    // mm/vmalloc.c
    static void vmap_init_free_space(void)
    {
        unsigned long vmap_start = 1;
        const unsigned long vmap_end = ULONG_MAX;
        struct vmap_area *busy, *free;

        /*
         *     B     F     B     B     B     F
         * -|-----|.....|-----|-----|-----|.....|-
         *  |           The KVA space           |
         *  |<--------------------------------->|
         */
        list_for_each_entry(busy, &vmap_area_list, list) {
            if (busy->va_start - vmap_start > 0) {
                free = kmem_cache_zalloc(vmap_area_cachep, GFP_NOWAIT);
                if (!WARN_ON_ONCE(!free)) {
                    free->va_start = vmap_start;
                    free->va_end = busy->va_start;

                    insert_vmap_area_augment(free, NULL,
                        &free_vmap_area_root,
                        &free_vmap_area_list);
                }
            }

            vmap_start = busy->va_end;
        }

        if (vmap_end - vmap_start > 0) {
            free = kmem_cache_zalloc(vmap_area_cachep, GFP_NOWAIT);
            if (!WARN_ON_ONCE(!free)) {
                free->va_start = vmap_start;
                free->va_end = vmap_end;

                insert_vmap_area_augment(free, NULL,
                    &free_vmap_area_root,
                    &free_vmap_area_list);
            }
        }
    }
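
The gap-walking logic of vmap_init_free_space can be tried outside the kernel. The sketch below is an illustration under simplifying assumptions: `struct range` and `collect_free_ranges` are hypothetical names, the busy areas come from a sorted array rather than vmap_area_list, and the free ranges are written to an output array instead of being inserted into the tree and list.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for struct vmap_area: a [start, end) range. */
struct range {
    unsigned long start;
    unsigned long end;
};

/*
 * Walk the busy ranges (sorted by start, non-overlapping) and record every
 * gap between them as a free range. The caller must provide room for
 * nbusy + 1 entries in free_out. Returns the number of free ranges written.
 */
size_t collect_free_ranges(const struct range *busy, size_t nbusy,
                           struct range *free_out)
{
    unsigned long cursor = 1;               /* mirrors vmap_start */
    const unsigned long limit = ULONG_MAX;  /* mirrors vmap_end */
    size_t nfree = 0;
    size_t i;

    for (i = 0; i < nbusy; i++) {
        assert(busy[i].start >= cursor && busy[i].end >= busy[i].start);

        /* A gap in front of this busy range becomes a free range. */
        if (busy[i].start > cursor) {
            free_out[nfree].start = cursor;
            free_out[nfree].end = busy[i].start;
            nfree++;
        }
        cursor = busy[i].end;
    }

    /* Everything after the last busy range is free as well. */
    if (limit > cursor) {
        free_out[nfree].start = cursor;
        free_out[nfree].end = limit;
        nfree++;
    }
    return nfree;
}
```

With busy ranges [0x1000, 0x2000), [0x2000, 0x3000) and [0x5000, 0x6000), the sketch yields three free ranges: one before the first busy range, one between 0x3000 and 0x5000, and one covering the tail of the address space. Back-to-back busy ranges produce no free entry between them, matching the `B B B` run in the diagram.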

3. Allocation flow

    /// Allocate physical pages and map them into vmalloc space.
    static void *__vmalloc_area_node(struct vm_struct *area, gfp_t gfp_mask,
                                     pgprot_t prot, unsigned int page_shift,
                                     int node)
    {
        /// ... ...
        /// Note: this call is made by the caller, __vmalloc_node_range(),
        /// before __vmalloc_area_node() runs. It is shown here to keep the
        /// three steps (reserve virtual range, allocate pages, map) together.
        area = __get_vm_area_node(real_size, align, shift, VM_ALLOC |
                                  VM_UNINITIALIZED | vm_flags, start, end, node,
                                  gfp_mask, caller);
        /// ... ...
        area->nr_pages = vm_area_alloc_pages(gfp_mask | __GFP_NOWARN,
            node, page_order, nr_small_pages, area->pages);
        /// ... ...
        do {
            ret = vmap_pages_range(addr, addr + size, prot, area->pages,
                                   page_shift);
            if (nofail && (ret < 0))
                schedule_timeout_uninterruptible(1);
        } while (nofail && (ret < 0));
        /// ... ...
    }

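Along the path alloc_vmap_area -> __alloc_vmap_area -> find_vmap_lowest_match, a matching struct vmap_area is found and removed from free_vmap_area_root and free_vmap_area_list.

This step may split the va. Any free part left over after the split is inserted back into free_vmap_area_root and free_vmap_area_list.

After a successful allocation, alloc_vmap_area inserts the va into vmap_area_root and vmap_area_list.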

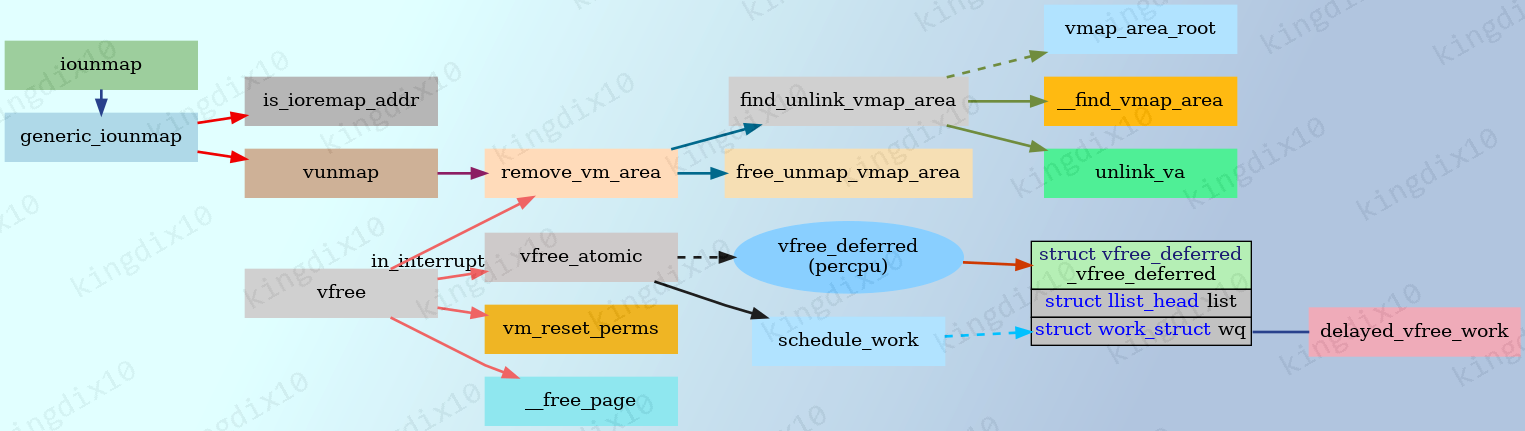
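
The find-lowest-match and split steps described above can be sketched in user space. This is an illustration only: `struct range` and `alloc_lowest` are hypothetical names, a linear scan over a sorted array replaces the augmented red-black tree lookup, and the return value 0 is used as a failure marker on the assumption that no free range starts at address 0.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified stand-in for struct vmap_area: a [start, end) range. */
struct range {
    unsigned long start;
    unsigned long end;
};

/*
 * Carve `size` bytes, aligned to `align` (a power of two), out of the lowest
 * free range that can hold them. `fr` is sorted by start, `*nfree` is its
 * length and `cap` its capacity. Returns the start address, or 0 on failure.
 */
unsigned long alloc_lowest(struct range *fr, size_t *nfree, size_t cap,
                           unsigned long size, unsigned long align)
{
    size_t i, j;

    assert(size > 0 && align > 0 && (align & (align - 1)) == 0);

    for (i = 0; i < *nfree; i++) {
        unsigned long start = (fr[i].start + align - 1) & ~(align - 1);
        unsigned long end;

        if (start < fr[i].start)            /* align-up overflowed */
            continue;
        if (start > fr[i].end || fr[i].end - start < size)
            continue;                       /* does not fit here */
        end = start + size;

        if (start == fr[i].start && end == fr[i].end) {
            /* Full fit: the free range disappears. */
            for (j = i + 1; j < *nfree; j++)
                fr[j - 1] = fr[j];
            (*nfree)--;
        } else if (start == fr[i].start) {
            /* Left-edge fit: shrink the free range from the front. */
            fr[i].start = end;
        } else if (end == fr[i].end) {
            /* Right-edge fit: shrink the free range from the back. */
            fr[i].end = start;
        } else {
            /* No-edge fit: split into two free ranges, needs one new entry. */
            if (*nfree == cap)
                return 0;
            for (j = *nfree; j > i + 1; j--)
                fr[j] = fr[j - 1];
            fr[i + 1].start = end;
            fr[i + 1].end = fr[i].end;
            fr[i].end = start;
            (*nfree)++;
        }
        return start;
    }
    return 0;
}
```

The no-edge case is the only one that needs a fresh object. That is the case the comment on vmap_area_cachep in section 1 singles out.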

4. vfree

    /// mm/vmalloc.c
    /**
     * vfree_atomic - release memory allocated by vmalloc()
     * @addr: memory base address
     *
     * This one is just like vfree() but can be called in any atomic context
     * except NMIs.
     */
    void vfree_atomic(const void *addr)
    {
        struct vfree_deferred *p = raw_cpu_ptr(&vfree_deferred);

        BUG_ON(in_nmi());
        kmemleak_free(addr);

        /*
         * Use raw_cpu_ptr() because this can be called from preemptible
         * context. Preemption is absolutely fine here, because the llist_add()
         * implementation is lockless, so it works even if we are adding to
         * another cpu's list. schedule_work() should be fine with this too.
         */
        if (addr && llist_add((struct llist_node *)addr, &p->list))
            schedule_work(&p->wq);
    }
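
Two details of vfree_atomic are worth seeing in isolation: the buffer being freed is itself reused as the list node (the `(struct llist_node *)addr` cast), and the work item is scheduled only when llist_add reports that the list was empty beforehand. The sketch below imitates both with C11 atomics in user space. All names (`struct node`, `struct deferred_list`, `defer_free`, `drain_deferred`) are hypothetical, `free()` stands in for the real release path, and the drain function only assumes what a worker such as delayed_vfree_work would do.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical list node; it is overlaid on the start of the buffer to free. */
struct node {
    struct node *next;
};

/* Hypothetical lock-free singly linked list head. */
struct deferred_list {
    _Atomic(struct node *) first;
};

/*
 * Queue `buf` (at least sizeof(struct node) bytes, obtained from malloc)
 * for later release. Returns true if the list was empty before the push,
 * i.e. the caller is the one who should wake the worker.
 */
bool defer_free(struct deferred_list *l, void *buf)
{
    struct node *n = buf;
    struct node *old = atomic_load(&l->first);

    assert(buf != NULL);
    do {
        n->next = old;  /* on CAS failure `old` is refreshed, so retry */
    } while (!atomic_compare_exchange_weak(&l->first, &old, n));

    return old == NULL;
}

/*
 * Worker side: detach the whole list in one atomic step, then free each
 * buffer. Returns how many buffers were released.
 */
size_t drain_deferred(struct deferred_list *l)
{
    struct node *cur = atomic_exchange(&l->first, NULL);
    size_t count = 0;

    while (cur) {
        struct node *next = cur->next;  /* read before the memory is freed */
        free(cur);
        count++;
        cur = next;
    }
    return count;
}
```

Because only the first push onto an empty list returns true, a burst of deferred frees triggers a single wake-up, and the worker picks up everything queued so far in one pass.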

5. Debug interface

    cat /proc/vmallocinfo