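1. Overview

Kernel-mode driver: linux-6.6/drivers/accel/ivpu/

User-mode driver: https://github.com/intel/linux-npu-driver/releases/tag/v1.2.0

User-mode library: https://github.com/intel/intel-npu-acceleration-library

14th-generation Intel processors integrate an NPU, and its driver uses DRM to manage memory. The core parts of DRM memory management are: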

- drm_mm_init

- buffer_object

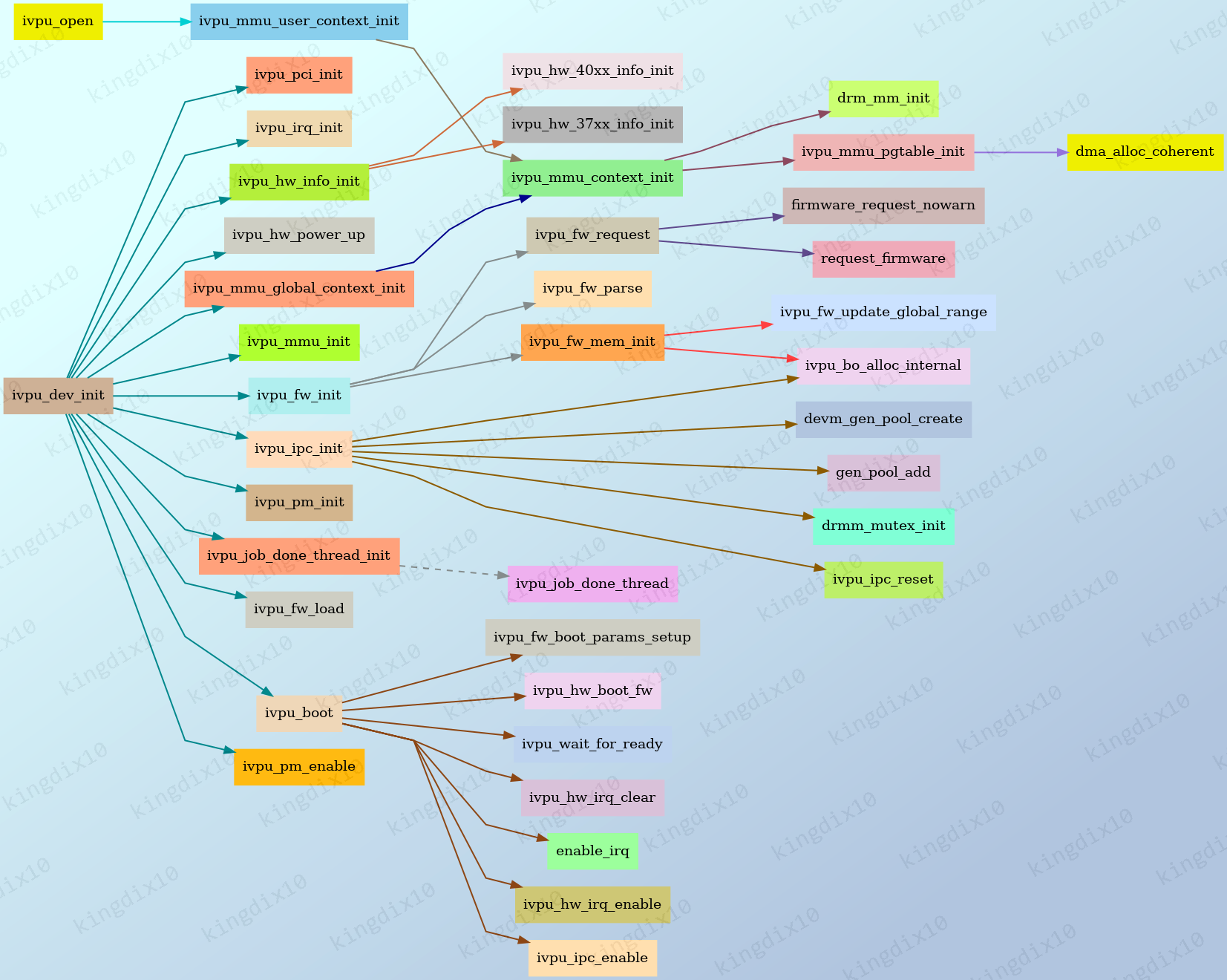

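2. DRM memory management initialization

ivpu_mmu_context_init calls drm_mm_init to hand the address range that needs to be managed over to the DRM memory manager (struct drm_mm).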

    /// linux-6.6/drivers/accel/ivpu/ivpu_mmu_context.c
    static int
    ivpu_mmu_context_init(struct ivpu_device *vdev, struct ivpu_mmu_context *ctx, u32 context_id)
    {
        u64 start, end;
        int ret;

        mutex_init(&ctx->lock);
        INIT_LIST_HEAD(&ctx->bo_list);

        ret = ivpu_mmu_pgtable_init(vdev, &ctx->pgtable);
        if (ret)
            return ret;

        if (!context_id) {
            start = vdev->hw->ranges.global.start;
            end = vdev->hw->ranges.shave.end;
        } else {
            start = vdev->hw->ranges.user.start;
            end = vdev->hw->ranges.dma.end;
        }

        drm_mm_init(&ctx->mm, start, end - start);
        ctx->id = context_id;

        return 0;
    }

The device address ranges are initialized as follows.

    /// drivers/accel/ivpu/ivpu_hw_40xx.c
    static int ivpu_hw_40xx_info_init(struct ivpu_device *vdev)
    {
        /// ... ...
        ivpu_pll_init_frequency_ratios(vdev);

        ivpu_hw_init_range(&vdev->hw->ranges.global, 0x80000000, SZ_512M);
        ivpu_hw_init_range(&vdev->hw->ranges.user,   0x80000000, SZ_256M);
        ivpu_hw_init_range(&vdev->hw->ranges.shave,  0x80000000 + SZ_256M, SZ_2G - SZ_256M);
        ivpu_hw_init_range(&vdev->hw->ranges.dma,   0x200000000, SZ_8G);

        return 0;
    }
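To make the resulting address windows concrete, the following is a minimal user-space sketch that reproduces the arithmetic of the two listings above. The type and function names (`addr_range`, `init_range`, `context_window`) are made up for illustration, and it assumes that a range's end is simply `start + size`; it is not the driver's code.

```c
#include <assert.h>
#include <stdint.h>

#define SZ_256M 0x10000000ULL
#define SZ_512M 0x20000000ULL
#define SZ_2G   0x80000000ULL
#define SZ_8G   0x200000000ULL

/* Simplified stand-in for the driver's address range type (hypothetical). */
struct addr_range {
    uint64_t start;
    uint64_t end;   /* exclusive */
};

struct hw_ranges {
    struct addr_range global, user, shave, dma;
};

/* Assumption: a range is stored as [start, start + size). */
static void init_range(struct addr_range *r, uint64_t start, uint64_t size)
{
    assert(size <= UINT64_MAX - start);   /* guard against wrap-around */
    r->start = start;
    r->end = start + size;
}

/* Same constants as the 40xx listing above. */
static void init_40xx_ranges(struct hw_ranges *hw)
{
    init_range(&hw->global, 0x80000000ULL, SZ_512M);
    init_range(&hw->user,   0x80000000ULL, SZ_256M);
    init_range(&hw->shave,  0x80000000ULL + SZ_256M, SZ_2G - SZ_256M);
    init_range(&hw->dma,    0x200000000ULL, SZ_8G);
}

/* Mirrors the start/end selection made before drm_mm_init is called. */
static void context_window(const struct hw_ranges *hw, uint32_t context_id,
                           uint64_t *start, uint64_t *size)
{
    uint64_t end;

    if (!context_id) {          /* global context */
        *start = hw->global.start;
        end = hw->shave.end;
    } else {                    /* any user context */
        *start = hw->user.start;
        end = hw->dma.end;
    }
    *size = end - *start;
}
```

With these constants, context 0 covers 0x80000000 to 0x100000000 (2 GiB), and a user context covers 0x80000000 to 0x400000000 (14 GiB), a window that also spans the unassigned gap between shave.end and dma.start.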

3. File operations

    /// drivers/accel/ivpu/ivpu_drv.c
    static const struct file_operations ivpu_fops = {
        .owner = THIS_MODULE,
        DRM_ACCEL_FOPS,
    };

    static const struct drm_driver driver = {
        .driver_features = DRIVER_GEM | DRIVER_COMPUTE_ACCEL,

        .open = ivpu_open,
        .postclose = ivpu_postclose,
        .gem_prime_import = ivpu_gem_prime_import,

    #if defined(CONFIG_DEBUG_FS)
        .debugfs_init = ivpu_debugfs_init,
    #endif

        .ioctls = ivpu_drm_ioctls,
        .num_ioctls = ARRAY_SIZE(ivpu_drm_ioctls),
        .fops = &ivpu_fops,

        .name = DRIVER_NAME,
        .desc = DRIVER_DESC,
        .date = DRIVER_DATE,
        .major = DRM_IVPU_DRIVER_MAJOR,
        .minor = DRM_IVPU_DRIVER_MINOR,
    };

3.1. open

ivpu_open allocates a new struct ivpu_file_priv, so every open creates a new struct ivpu_mmu_context, and its id (the SSID) starts at 2.

    /// drivers/accel/ivpu/ivpu_drv.h
    #define IVPU_GLOBAL_CONTEXT_MMU_SSID 0
    /* SSID 1 is used by the VPU to represent invalid context */
    #define IVPU_USER_CONTEXT_MIN_SSID 2
    #define IVPU_USER_CONTEXT_MAX_SSID (IVPU_USER_CONTEXT_MIN_SSID + 63)
    /// ... ...
    /*
     * file_priv has its own refcount (ref) that allows user space to close the fd
     * without blocking even if VPU is still processing some jobs.
     */
    struct ivpu_file_priv {
        struct kref ref;
        struct ivpu_device *vdev;
        struct mutex lock; /* Protects cmdq */
        struct ivpu_cmdq *cmdq[IVPU_NUM_ENGINES];
        struct ivpu_mmu_context ctx;
        u32 priority;
        bool has_mmu_faults;
    };
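The SSID limits above imply that at most 64 user contexts (ids 2 through 65) can exist at once. The sketch below illustrates that constraint with a simple bitmap allocator. It is only a stand-in: the names (`ssid_pool`, `ssid_alloc`, `ssid_free`) are hypothetical, the "lowest free id first" policy is an assumption, and the kernel driver uses its own ID allocation mechanism rather than this code.

```c
#include <stdint.h>

#define USER_CONTEXT_MIN_SSID 2
#define USER_CONTEXT_MAX_SSID (USER_CONTEXT_MIN_SSID + 63)
#define USER_CONTEXT_COUNT \
    (USER_CONTEXT_MAX_SSID - USER_CONTEXT_MIN_SSID + 1)   /* 64 ids */

/* Hypothetical allocator state: bit i set means SSID (MIN + i) is in use. */
struct ssid_pool {
    uint64_t used;
};

/* Returns the lowest free SSID, or -1 when every user SSID is taken. */
static int ssid_alloc(struct ssid_pool *pool)
{
    for (int i = 0; i < USER_CONTEXT_COUNT; i++) {
        uint64_t bit = 1ULL << i;

        if (!(pool->used & bit)) {
            pool->used |= bit;
            return USER_CONTEXT_MIN_SSID + i;
        }
    }
    return -1;
}

/* Releases an SSID; values outside the user range are ignored. */
static void ssid_free(struct ssid_pool *pool, int ssid)
{
    if (ssid < USER_CONTEXT_MIN_SSID || ssid > USER_CONTEXT_MAX_SSID)
        return;
    pool->used &= ~(1ULL << (ssid - USER_CONTEXT_MIN_SSID));
}
```

SSID 0 (the global context) and SSID 1 (reserved as "invalid") never appear in the pool, which matches the comment in the header.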

struct ivpu_mmu_context embeds a struct drm_mm. For a user context, that struct drm_mm manages the range from user.start to dma.end.

    /// drivers/accel/ivpu/ivpu_mmu_context.h
    struct ivpu_mmu_context {
        struct mutex lock; /* protects: mm, pgtable, bo_list */
        struct drm_mm mm;
        struct ivpu_mmu_pgtable pgtable;
        struct list_head bo_list;
        u32 id;
    };

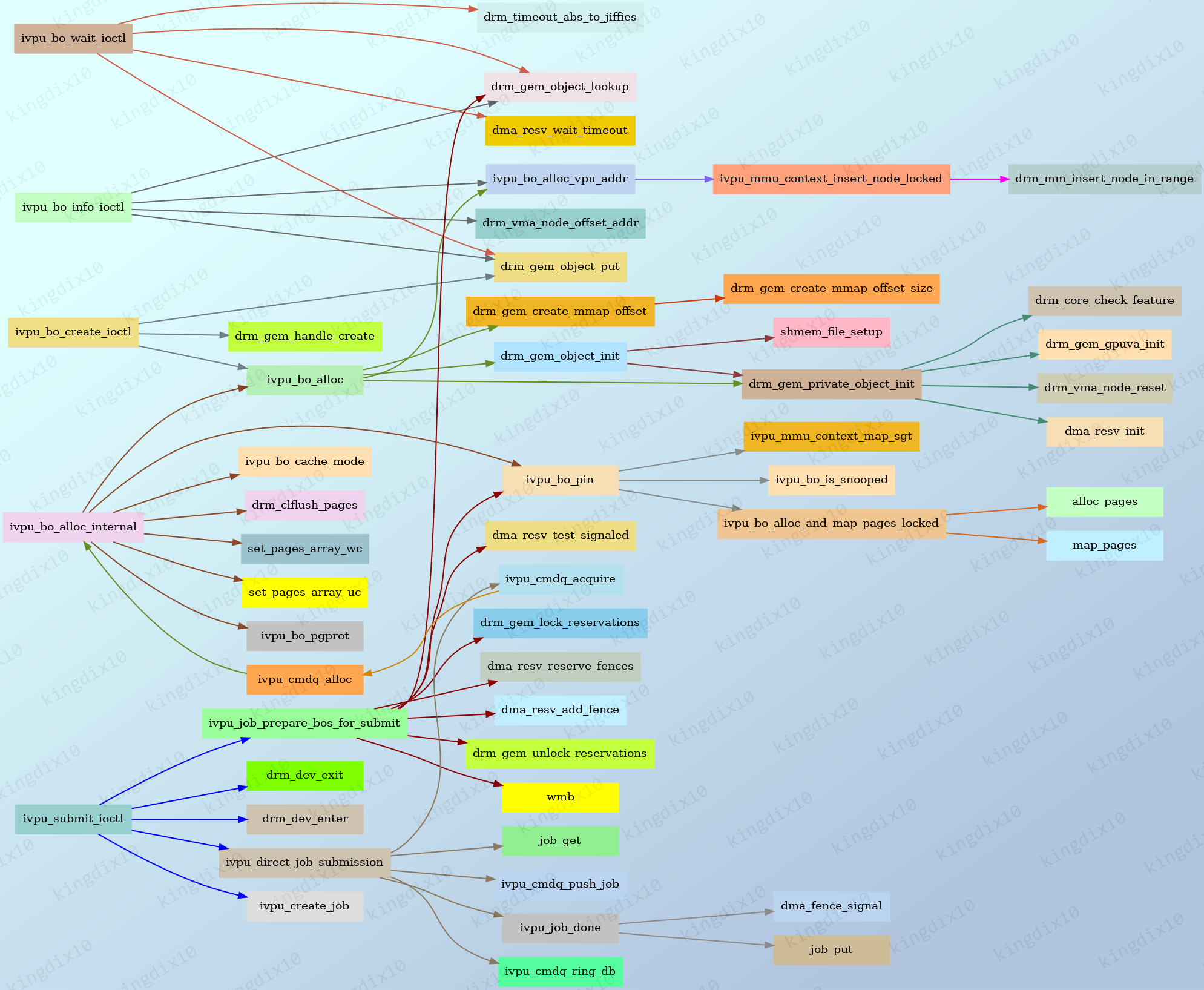

3.2. ioctl

The ioctls registered by ivpu, including the commonly used buffer object ones, are as follows:

    /// drivers/accel/ivpu/ivpu_drv.c
    static const struct drm_ioctl_desc ivpu_drm_ioctls[] = {
        DRM_IOCTL_DEF_DRV(IVPU_GET_PARAM, ivpu_get_param_ioctl, 0),
        DRM_IOCTL_DEF_DRV(IVPU_SET_PARAM, ivpu_set_param_ioctl, 0),
        DRM_IOCTL_DEF_DRV(IVPU_BO_CREATE, ivpu_bo_create_ioctl, 0),
        DRM_IOCTL_DEF_DRV(IVPU_BO_INFO, ivpu_bo_info_ioctl, 0),
        DRM_IOCTL_DEF_DRV(IVPU_SUBMIT, ivpu_submit_ioctl, 0),
        DRM_IOCTL_DEF_DRV(IVPU_BO_WAIT, ivpu_bo_wait_ioctl, 0),
    };

4. mmap

ivpu_fops is built from DRM_ACCEL_FOPS, so mmap defaults to drm_gem_mmap.

    /// include/drm/drm_accel.h
    /**
     * DRM_ACCEL_FOPS - Default drm accelerators file operations
     *
     * This macro provides a shorthand for setting the accelerator file ops in the
     * &file_operations structure.  If all you need are the default ops, use
     * DEFINE_DRM_ACCEL_FOPS instead.
     */
    #define DRM_ACCEL_FOPS \
        .open           = accel_open,\
        .release        = drm_release,\
        .unlocked_ioctl = drm_ioctl,\
        .compat_ioctl   = drm_compat_ioctl,\
        .poll           = drm_poll,\
        .read           = drm_read,\
        .llseek         = noop_llseek, \
        .mmap           = drm_gem_mmap

5. User-space program

VPUDriverApi mainly wraps the individual file operations, such as ioctl and mmap.

    /// linux-npu-driver/umd/vpu_driver/source/os_interface/vpu_driver_api.cpp
    int VPUDriverApi::wait(void *args) const {
        return doIoctl(DRM_IOCTL_IVPU_BO_WAIT, args);
    }

    int VPUDriverApi::closeBuffer(uint32_t handle) const {
        struct drm_gem_close args = {.handle = handle, .pad = 0};
        return doIoctl(DRM_IOCTL_GEM_CLOSE, &args);
    }

    int VPUDriverApi::createBuffer(size_t size,
                                   uint32_t flags,
                                   uint32_t &handle,
                                   uint64_t &vpuAddr) const {
        drm_ivpu_bo_create args = {};
        args.size = size;
        args.flags = flags;

        int ret = doIoctl(DRM_IOCTL_IVPU_BO_CREATE, &args);
        if (ret) {
            if (errno == ENOSPC) {
                LOG_E("Buffer size is too big.");
            }

            LOG_E("Failed to call DRM_IOCTL_IVPU_BO_CREATE");
            return ret;
        }

        handle = args.handle;
        vpuAddr = args.vpu_addr;
        return ret;
    }

    int VPUDriverApi::getBufferInfo(uint32_t handle, uint64_t &mmap_offset) const {
        drm_ivpu_bo_info args = {};
        args.handle = handle;

        int ret = doIoctl(DRM_IOCTL_IVPU_BO_INFO, &args);
        if (ret) {
            LOG_E("Failed to call DRM_IOCTL_IVPU_BO_INFO");
            return ret;
        }

        mmap_offset = args.mmap_offset;
        return ret;
    }

    int VPUDriverApi::getExtBufferInfo(uint32_t handle,
                                       uint32_t &flags,
                                       uint64_t &vpu_address,
                                       uint64_t &size,
                                       uint64_t &mmap_offset) const {
        drm_ivpu_bo_info args = {};
        args.handle = handle;

        int ret = doIoctl(DRM_IOCTL_IVPU_BO_INFO, &args);
        if (ret) {
            LOG_E("Failed to call DRM_IOCTL_IVPU_BO_INFO");
            return ret;
        }

        flags = args.flags;
        vpu_address = args.vpu_addr;
        size = args.size;
        mmap_offset = args.mmap_offset;
        return ret;
    }

    int VPUDriverApi::exportBuffer(uint32_t handle, uint32_t flags, int32_t &fd) const {
        drm_prime_handle args = {.handle = handle, .flags = flags, .fd = -1};

        int ret = doIoctl(DRM_IOCTL_PRIME_HANDLE_TO_FD, &args);
        if (ret) {
            LOG_E("Failed to call DRM_IOCTL_PRIME_HANDLE_TO_FD");
            return ret;
        }

        fd = args.fd;
        return ret;
    }

    int VPUDriverApi::importBuffer(int32_t fd, uint32_t flags, uint32_t &handle) const {
        drm_prime_handle args = {.handle = 0, .flags = flags, .fd = fd};

        int ret = doIoctl(DRM_IOCTL_PRIME_FD_TO_HANDLE, &args);
        if (ret) {
            LOG_E("Failed to call DRM_IOCTL_PRIME_FD_TO_HANDLE");
            return ret;
        }

        handle = args.handle;
        return ret;
    }

    void *VPUDriverApi::mmap(size_t size, off_t offset) const {
        void *ptr = osInfc.osiMmap(nullptr, size, PROT_READ | PROT_WRITE, MAP_SHARED, vpuFd, offset);
        if (ptr == MAP_FAILED) {
            LOG_E("Failed to mmap the memory using offset received from KMD");
            return nullptr;
        }

        return ptr;
    }

    int VPUDriverApi::unmap(void *ptr, size_t size) const {
        return osInfc.osiMunmap(ptr, size);
    }

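5.1. buffer_object operations

The buffer object is the central concept of DRM memory management. The user-space flow is:

- create bo: have the kernel create a buffer object

- info bo: query information about the bo, such as its address and mmap offset

- mmap: map the kernel-side bo into user space

- read/write bo: read and write the data from user space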
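As a device-free illustration of these four steps, the sketch below replaces the NPU buffer object with an anonymous temporary file. Everything named `fake_bo_*` is hypothetical and exists only for this example; the point is the ordering (create, query the mmap offset, mmap with MAP_SHARED, then access through the pointer) and the fact that writes through the mapping land in the backing object.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <string.h>
#include <sys/mman.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical stand-in for a buffer object: a temp file plays the kernel bo. */
struct fake_bo {
    FILE *backing;      /* plays the role of the kernel-side object */
    size_t size;
    off_t mmap_offset;  /* what an "info" query would report */
    void *ptr;          /* user-space mapping, NULL until mapped */
};

/* Step 1, "create bo": allocate backing storage of the requested size. */
static int fake_bo_create(struct fake_bo *bo, size_t size)
{
    bo->backing = tmpfile();
    if (!bo->backing)
        return -1;
    if (ftruncate(fileno(bo->backing), (off_t)size) != 0) {
        fclose(bo->backing);
        return -1;
    }
    bo->size = size;
    bo->mmap_offset = 0;
    bo->ptr = NULL;
    return 0;
}

/* Step 2, "info bo": report the offset that must be passed to mmap. */
static off_t fake_bo_info(const struct fake_bo *bo)
{
    return bo->mmap_offset;
}

/* Step 3, "mmap": map the object into this process with shared semantics. */
static void *fake_bo_map(struct fake_bo *bo)
{
    void *p = mmap(NULL, bo->size, PROT_READ | PROT_WRITE, MAP_SHARED,
                   fileno(bo->backing), fake_bo_info(bo));
    if (p == MAP_FAILED)
        return NULL;
    bo->ptr = p;
    return p;
}

/* Cleanup: unmap first, then drop the backing object. */
static void fake_bo_destroy(struct fake_bo *bo)
{
    if (bo->ptr)
        munmap(bo->ptr, bo->size);
    fclose(bo->backing);
}
```

Step 4 is then an ordinary `memcpy` to or from the returned pointer. With the real driver, steps 1 and 2 are ioctls on the device fd and the offset comes from the kernel rather than being 0.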

The corresponding implementation in the user-mode driver:

    /// linux-npu-driver/umd/vpu_driver/source/memory/vpu_buffer_object.cpp
    std::unique_ptr<VPUBufferObject>
    VPUBufferObject::create(const VPUDriverApi &drvApi, Location type, Type range, size_t size) {
        uint32_t handle = 0;
        uint64_t vpuAddr = 0;
        if (drvApi.createBuffer(size, static_cast<uint32_t>(range), handle, vpuAddr)) {
            LOG_E("Failed to allocate memory");
            return nullptr;
        }

        void *ptr = nullptr;
        uint64_t offset = 0;
        if (drvApi.getBufferInfo(handle, offset)) {
            LOG_E("Failed to get info about buffer");
            drvApi.closeBuffer(handle);
            return nullptr;
        }

        ptr = drvApi.mmap(size, safe_cast<off_t>(offset));
        if (ptr == nullptr) {
            LOG_E("Failed to mmap the created buffer");
            drvApi.closeBuffer(handle);
            return nullptr;
        }
        /// ptr is stored in VPUBufferObject::basePtr, size in VPUBufferObject::allocSize
        return std::make_unique<VPUBufferObject>(drvApi, type, range, ptr, size, handle, vpuAddr);
    }
    /// ... ...
    bool VPUBufferObject::copyToBuffer(const void *data, size_t size, uint64_t offset) {
        if (offset > allocSize) {
            LOG_E("Invalid offset value");
            return false;
        }

        uint8_t *dstPtr = basePtr + offset;
        size_t dstMax = allocSize - offset;

        if (data == nullptr || size == 0 || dstMax == 0 || size > dstMax) {
            LOG_E("Invalid arguments. data(%p) size(%ld) dstMax(%ld)", data, size, dstMax);
            return false;
        }

        memcpy(dstPtr, data, size);
        return true;
    }
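The bounds check in copyToBuffer is worth a closer look: it compares `size` against the remaining room `allocSize - offset` instead of testing `offset + size <= allocSize`, so the sum can never wrap around. The following is a minimal C sketch of the same idea, not the driver's code; `struct buffer` and `copy_to_buffer` are hypothetical names.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical stand-in for a mapped buffer object. */
struct buffer {
    uint8_t *base;       /* start of the mapping */
    size_t alloc_size;   /* size of the mapping in bytes */
};

/* Copies size bytes to base + offset; returns false on any invalid argument. */
static bool copy_to_buffer(struct buffer *bo, const void *data,
                           size_t size, size_t offset)
{
    if (data == NULL || size == 0)
        return false;

    /* The offset must point inside the buffer, leaving at least one byte. */
    if (offset >= bo->alloc_size)
        return false;

    /* Safe subtraction: offset < alloc_size, so this cannot underflow. */
    if (size > bo->alloc_size - offset)
        return false;

    memcpy(bo->base + offset, data, size);
    return true;
}
```

A request with a huge offset such as `SIZE_MAX` is rejected by the second check, whereas a naive `offset + size` comparison could overflow and pass.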

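6. Cross-process access

Suppose user space has two processes, A and B, and B needs to access a buffer object allocated by A. The steps are:

- A uses DRM_IOCTL_PRIME_HANDLE_TO_FD to convert the handle into an fd

- A sends the fd to B over inter-process communication (a file descriptor has to be passed with a mechanism such as SCM_RIGHTS on a Unix domain socket, because its integer value alone means nothing in another process)

- B uses DRM_IOCTL_PRIME_FD_TO_HANDLE to convert the fd back into a handle, and then accesses the buffer object through ioctl and so on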
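The hand-off between the two processes is the part that DRM does not provide: the fd returned by DRM_IOCTL_PRIME_HANDLE_TO_FD has to travel from A to B. One common way, sketched below, is an SCM_RIGHTS control message on a Unix domain socket. The helper names `send_fd` and `recv_fd` are made up for this example, and how A and B obtain the connected socket is left out.

```c
#include <string.h>
#include <sys/socket.h>
#include <sys/uio.h>
#include <unistd.h>

/* Sends one file descriptor over a connected Unix domain socket. */
static int send_fd(int sock, int fd)
{
    char dummy = 'F';   /* at least one byte of real data must be sent */
    struct iovec iov = { .iov_base = &dummy, .iov_len = 1 };
    union {             /* union guarantees cmsghdr alignment */
        char buf[CMSG_SPACE(sizeof(int))];
        struct cmsghdr align;
    } ctrl;
    struct msghdr msg;

    memset(&msg, 0, sizeof(msg));
    memset(&ctrl, 0, sizeof(ctrl));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    struct cmsghdr *cmsg = CMSG_FIRSTHDR(&msg);
    cmsg->cmsg_level = SOL_SOCKET;
    cmsg->cmsg_type = SCM_RIGHTS;
    cmsg->cmsg_len = CMSG_LEN(sizeof(int));
    memcpy(CMSG_DATA(cmsg), &fd, sizeof(int));

    return sendmsg(sock, &msg, 0) == 1 ? 0 : -1;
}

/* Receives one file descriptor; returns it, or -1 on failure. */
static int recv_fd(int sock)
{
    char dummy;
    struct iovec iov = { .iov_base = &dummy, .iov_len = 1 };
    union {
        char buf[CMSG_SPACE(sizeof(int))];
        struct cmsghdr align;
    } ctrl;
    struct msghdr msg;
    int fd;

    memset(&msg, 0, sizeof(msg));
    memset(&ctrl, 0, sizeof(ctrl));
    msg.msg_iov = &iov;
    msg.msg_iovlen = 1;
    msg.msg_control = ctrl.buf;
    msg.msg_controllen = sizeof(ctrl.buf);

    if (recvmsg(sock, &msg, 0) != 1)
        return -1;

    struct cmsghdr *cmsg = CMSG_FIRSTHDR(&msg);
    if (!cmsg || cmsg->cmsg_level != SOL_SOCKET || cmsg->cmsg_type != SCM_RIGHTS)
        return -1;

    memcpy(&fd, CMSG_DATA(cmsg), sizeof(int));
    return fd;   /* a new descriptor number, valid in the receiving process */
}
```

In the scenario above, A would call `send_fd` with the fd obtained from DRM_IOCTL_PRIME_HANDLE_TO_FD, and B would pass the result of `recv_fd` to DRM_IOCTL_PRIME_FD_TO_HANDLE on its own device fd.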

Both ioctls are registered in the DRM core ioctl table:

    /// drivers/gpu/drm/drm_ioctl.c
    DRM_IOCTL_DEF(DRM_IOCTL_PRIME_HANDLE_TO_FD, drm_prime_handle_to_fd_ioctl, DRM_RENDER_ALLOW),
    DRM_IOCTL_DEF(DRM_IOCTL_PRIME_FD_TO_HANDLE, drm_prime_fd_to_handle_ioctl, DRM_RENDER_ALLOW),

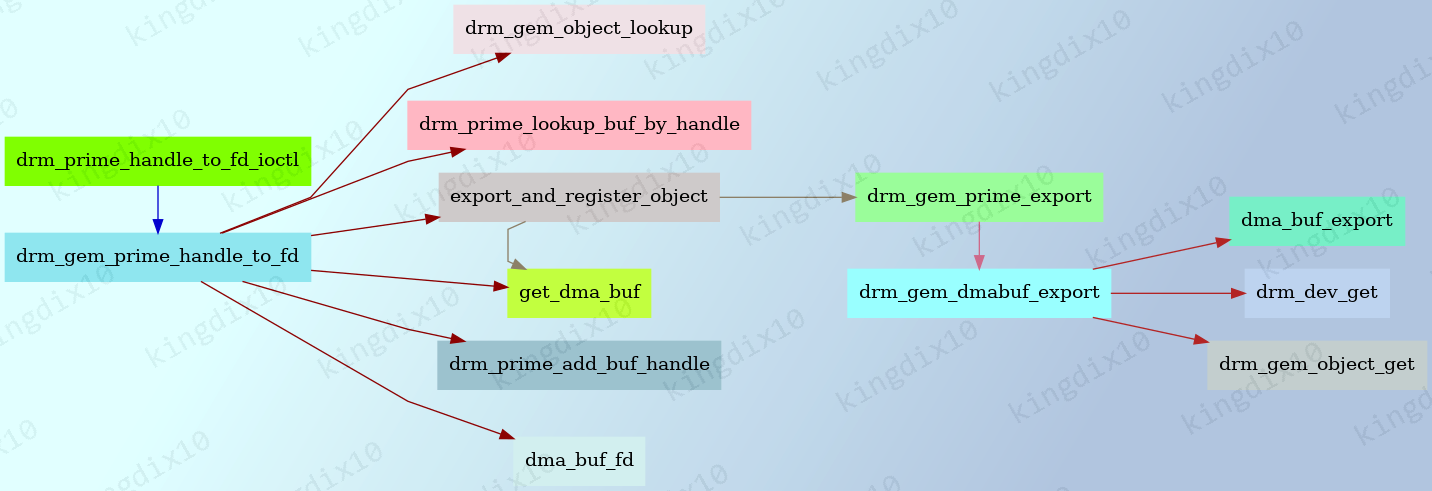

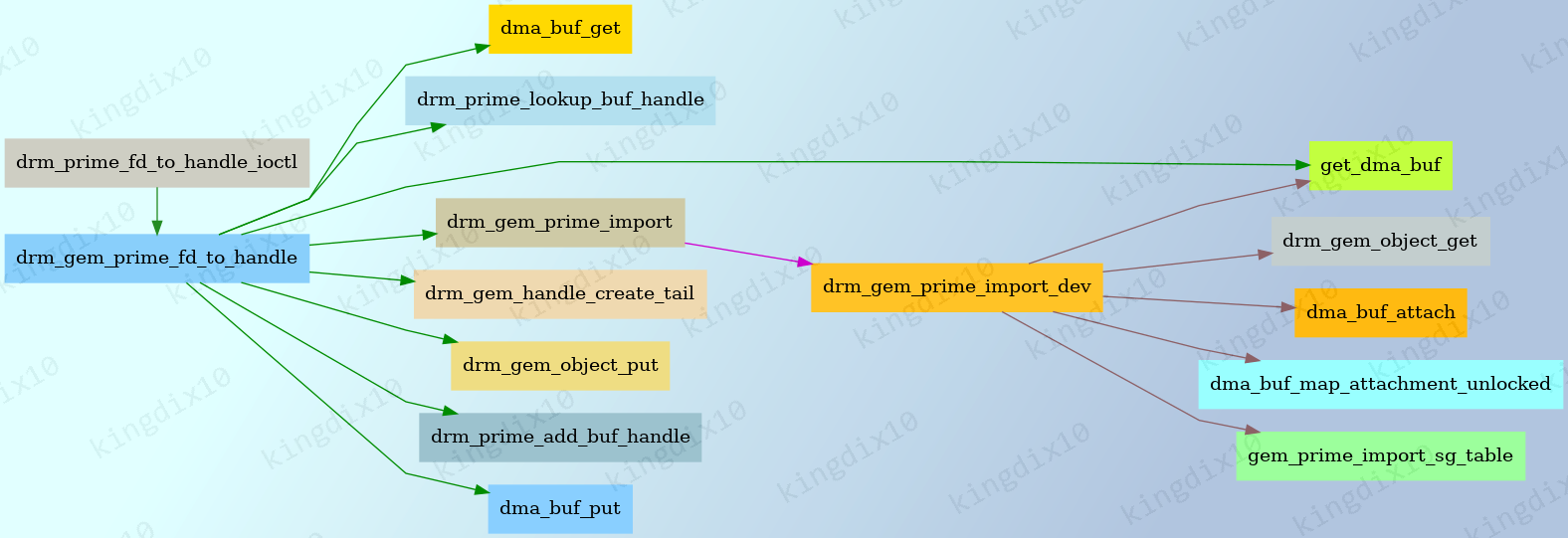

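6.1. Kernel implementation

At present (linux-6.6), only drivers/gpu/drm/vmwgfx/vmwgfx_drv.c defines its own prime_fd_to_handle and prime_handle_to_fd callbacks. The ivpu drm_driver shown earlier sets neither, so it takes the drm_gem_prime_* default paths below.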

    /// drivers/gpu/drm/drm_prime.c
    int drm_prime_fd_to_handle_ioctl(struct drm_device *dev, void *data,
                                     struct drm_file *file_priv)
    {
        struct drm_prime_handle *args = data;

        if (dev->driver->prime_fd_to_handle) {
            return dev->driver->prime_fd_to_handle(dev, file_priv, args->fd,
                                                   &args->handle);
        }

        return drm_gem_prime_fd_to_handle(dev, file_priv, args->fd, &args->handle);
    }
    /// ... ...
    int drm_prime_handle_to_fd_ioctl(struct drm_device *dev, void *data,
                                     struct drm_file *file_priv)
    {
        struct drm_prime_handle *args = data;

        /* check flags are valid */
        if (args->flags & ~(DRM_CLOEXEC | DRM_RDWR))
            return -EINVAL;

        if (dev->driver->prime_handle_to_fd) {
            return dev->driver->prime_handle_to_fd(dev, file_priv,
                                                   args->handle, args->flags,
                                                   &args->fd);
        }
        return drm_gem_prime_handle_to_fd(dev, file_priv, args->handle,
                                          args->flags, &args->fd);
    }
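Both functions follow the same pattern: validate the arguments, call the driver's callback if one is set, otherwise fall back to the generic GEM helper. The sketch below isolates that pattern in plain C. All `sketch_*` names are hypothetical, the fd values are placeholders, and it assumes the two accepted flags correspond to O_CLOEXEC and O_RDWR.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <fcntl.h>
#include <stddef.h>
#include <stdint.h>

/* Assumption: these stand in for DRM_CLOEXEC and DRM_RDWR. */
#define SKETCH_CLOEXEC ((uint32_t)O_CLOEXEC)
#define SKETCH_RDWR    ((uint32_t)O_RDWR)

/* Hypothetical driver description with one optional callback. */
struct sketch_driver {
    /* NULL means "no override, use the generic helper". */
    int (*prime_handle_to_fd)(uint32_t handle, uint32_t flags, int *fd);
};

/* Placeholder for the generic path; a real helper would export a dma-buf. */
static int sketch_generic_handle_to_fd(uint32_t handle, uint32_t flags, int *fd)
{
    (void)flags;
    *fd = 100 + (int)handle;
    return 0;
}

/* Placeholder for a driver-specific override. */
static int sketch_custom_handle_to_fd(uint32_t handle, uint32_t flags, int *fd)
{
    (void)handle;
    (void)flags;
    *fd = 500;
    return 0;
}

/* Validate flags, then prefer the driver callback over the generic helper. */
static int sketch_handle_to_fd_ioctl(const struct sketch_driver *drv,
                                     uint32_t handle, uint32_t flags, int *fd)
{
    if (flags & ~(SKETCH_CLOEXEC | SKETCH_RDWR))
        return -EINVAL;

    if (drv->prime_handle_to_fd)
        return drv->prime_handle_to_fd(handle, flags, fd);

    return sketch_generic_handle_to_fd(handle, flags, fd);
}
```

A driver that leaves the callback unset, as ivpu does, always ends up in the generic branch.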

6.1.1. fd_to_handle

6.1.2. handle_to_fd